
I just want ChatGPT to teach me something

OpenAI has a new model. But it's not GPT-5 (please, please, we just like how it feels to increment a number!). It's called "o1-preview", and it's a GPT that's fine-tuned for chain-of-thought reasoning.

On one hand, chain-of-thought prompting has been possible all along with GPT-3+, and is widely used. Heck, you can find it on a site called LearnPrompting.org.
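For anyone who hasn't seen it, here is a minimal sketch of what doing chain-of-thought prompting by hand looks like: you either show the model worked reasoning before each answer (few-shot) or append a trigger phrase asking it to reason first (zero-shot). The helper name `build_cot_prompt` and the Q/A layout are hypothetical choices for illustration, not any vendor's API; "Let's think step by step." is the widely cited zero-shot trigger phrase.

```python
from typing import Optional, Sequence, Tuple

# (question, worked reasoning, final answer) triple used as a few-shot example.
Exemplar = Tuple[str, str, str]


def build_cot_prompt(question: str, exemplars: Optional[Sequence[Exemplar]] = None) -> str:
    """Build a chain-of-thought prompt string (hypothetical helper, not an OpenAI API)."""
    parts = []
    # Few-shot CoT: each example shows its reasoning before stating the answer.
    for q, reasoning, answer in exemplars or ():
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    # Zero-shot CoT trigger: nudge the model to write out its steps first.
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


example = (
    "Roger has 5 tennis balls. He buys 2 cans of 3 tennis balls each. How many does he have now?",
    "He starts with 5 balls. 2 cans of 3 balls is 6 balls. 5 + 6 = 11.",
    "11",
)
print(build_cot_prompt("How many times does the letter r appear in 'strawberry'?", [example]))
```

With o1, the step-by-step output that a prompt like this asks for is produced without being asked, and the raw steps are kept out of view.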

But OpenAI has taken things a step further by (1) training this model to use chain-of-thought all the time and (2), more importantly, hiding the chain-of-thought from users. We do get to see a kind of summary of the reasoning steps (at least in ChatGPT), but not the gory details of the LLM output. This might give OpenAI some protection against other models being trained on o1 output, but it's a bummer.

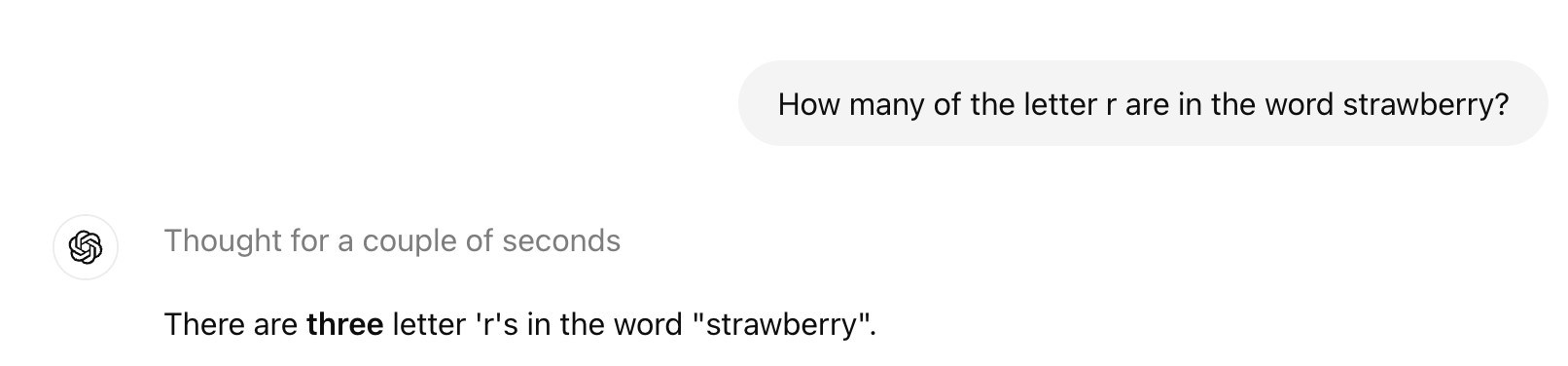

I don't have access to o1 via the API, but I do have it in ChatGPT. And it has no problem with the classic strawberry problem, counting the r's in "strawberry" (LLMs struggle here because tokenization hides individual letters inside multi-character tokens).
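To make the tokenization point concrete, here is a small sketch. The split into `str` / `aw` / `berry` and the token IDs are made up for illustration; real tokenizers may split the word differently and certainly use different IDs. The point is only that the model receives a list of IDs, not a list of letters.

```python
word = "strawberry"

# At the character level, counting is trivial.
char_count = word.count("r")

# Hypothetical subword split and made-up token IDs (illustration only).
tokens = ["str", "aw", "berry"]
vocab = {"str": 496, "aw": 675, "berry": 19772}
token_ids = [vocab[t] for t in tokens]

# The model sees only token_ids. Recovering the count means knowing how
# each ID is spelled, which is not directly present in the input.
recovered = sum(t.count("r") for t in tokens)

print(char_count)  # 3
print(token_ids)   # [496, 675, 19772]
print(recovered)   # 3
```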

So, at least we have that. I like to challenge these models to teach me something; let's try that.
